Self-driving cars will one day pull over for police

Self-driving cars have a lot to learn before they're ready to tackle every situation, including how to pay attention to police or road crew hand signals.

The Detroit News reported Monday on a California Highway Patrol officer who attempted to stop a Tesla Model S cruising at speeds above the posted limit. Lights and sirens failed to get the car to slow down, and it wasn't until the officer pulled alongside the Tesla that he saw the driver had nodded off. Tesla's Autopilot system had taken over driving duties.

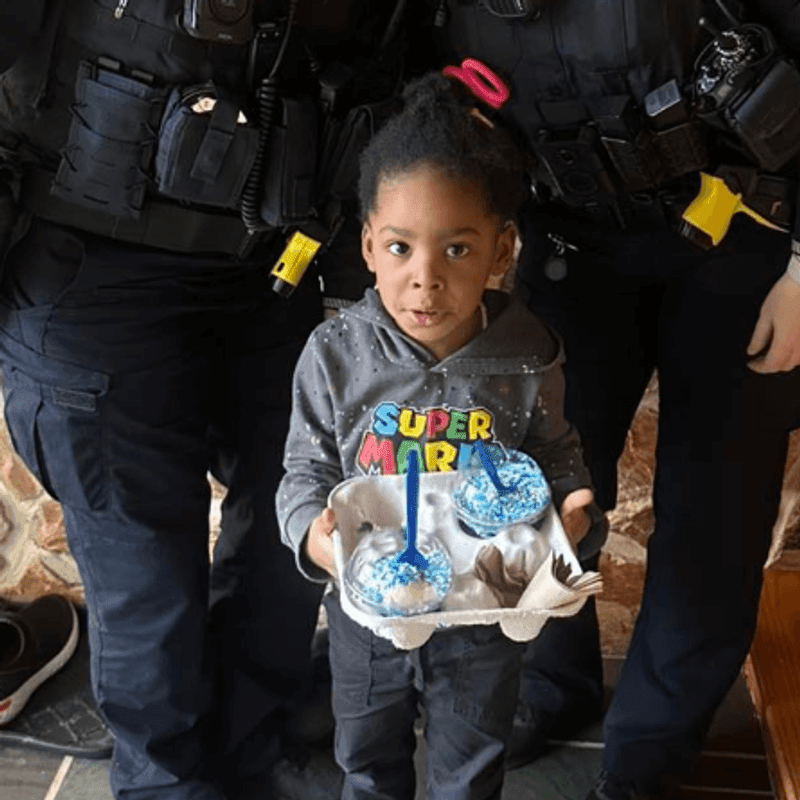

The highway patrol needed to get creative to stop the car. Eventually, units blocked traffic from behind and another police vehicle drove in front of the Tesla until it came to a complete stop. The driver was found to be intoxicated and was charged with driving under the influence.

The incident left police wondering how they will operate in a world of self-driving cars, and it raises numerous questions.

Last summer, Michigan state trooper Ken Monroe took Ford engineers on ride-alongs, during which the engineers asked what he'd like to see self-driving cars do in that sort of situation. Of course, flashing lights can mean different things. An officer may just need to pass the self-driving car, or the officer could intend to stop it. The state trooper and engineers said the most obvious cue available to a self-driving car right now is how long the officer has been behind it.

Yet the bigger task is to make self-driving cars understand an officer's intentions when the officer brings the car to a stop. Humans know the drill, but machines need to learn what the signals mean.
