3 issues could determine who's at fault in Tesla's fatal crash

It's a question that's been on our minds for years: When autonomous cars collide, who's at fault? Sadly, the question is no longer hypothetical but very, very real, following the death of a driver using Tesla's semi-autonomous Autopilot system in his 2015 Model S.

Less than a year ago, several companies at the forefront of autonomous car technology said quite plainly that if a self-driving vehicle crashed while using their software, they would take responsibility. Tesla hasn't admitted fault in the May 7 death of Joshua Brown, which occurred in Williston, Florida. However, at least a few experts in the legal and automotive fields believe the automaker could be held liable.

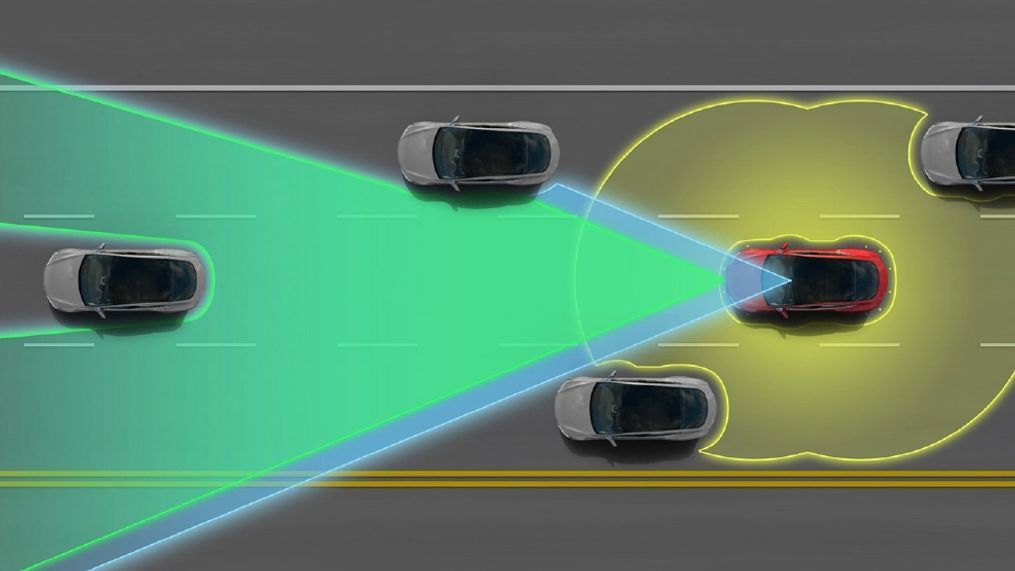

That wouldn't be surprising. In February of this year, the National Highway Traffic Safety Administration (NHTSA) declared that Google's autonomous software could be considered a "driver" under federal vehicle safety standards. Though the impact of NHTSA's decision could be tempered in court, it's bound to carry some weight, particularly in this instance: Tesla has determined that Autopilot was in control of Mr. Brown's car at the time of the accident.

If the case does go to trial--and as of yet, no suits have been filed--there are three key issues that could have an effect on the court's ruling:

The beta status of Autopilot: In its blog post responding to Mr. Brown's death, Tesla notes that the company "disables Autopilot by default and requires explicit acknowledgement that the system is new technology and still in a public beta phase before it can be enabled". The automaker goes on to say that "Autopilot is getting better all the time, but it is not perfect".

Those sorts of "caveat emptor" statements don't excuse Tesla from liability--in fact, some wonder whether consumers should be allowed to beta-test such potentially dangerous software at all. However, they might reduce the cost of any judgments issued against Tesla.

Warnings and guidelines associated with Autopilot: Tesla goes to great lengths to let owners know that Autopilot is in beta, and the company also provides explicit instructions on how drivers should behave while using the software. Again, from Tesla's blog post:

"When drivers activate Autopilot, the acknowledgment box explains, among other things, that Autopilot 'is an assist feature that requires you to keep your hands on the steering wheel at all times,' and that 'you need to maintain control and responsibility for your vehicle' while using it. Additionally, every time that Autopilot is engaged, the car reminds the driver to 'Always keep your hands on the wheel. Be prepared to take over at any time.' The system also makes frequent checks to ensure that the driver's hands remain on the wheel and provides visual and audible alerts if hands-on is not detected. It then gradually slows down the car until hands-on is detected again."

That's not likely to exonerate Tesla in a courtroom. However, as attorney Gail L. Gottehrer told The Detroit News, "it's going to be hard to make a compelling argument that Tesla was trying to deceive anybody." That, too, could reduce any damages a court awards.

Whether Mr. Brown was behaving responsibly: There's no such thing as a completely safe vehicle. Even when every car becomes autonomous, humans will probably still find ways to screw something up. We have a demonstrated tendency to ignore directions: in some cases, that tendency manifests itself as mere negligence; in others, it looks more like a death wish. Consider the driver who tried to use Autopilot while sitting in the back seat.

There are reports that Mr. Brown might've been watching a movie at the time of his crash. We think it's a terrible policy to blame the victim, especially before a full investigation has been carried out. However, if Mr. Brown wasn't behaving as Tesla instructed him to, that could have a serious effect on a court's findings.

We'll keep you updated as this groundbreaking case progresses.