Royal photo controversy reignites concerns over AI and trust in digital content

(TND) — The British royal family stepped into a bit of controversy over an edited photo they shared showing Kate, Princess of Wales, and her children.

News agencies retracted the photo, which was provided by the royal family, after noting inconsistencies in the image that indicated digital manipulation.

And Kate followed up Monday with an apology “for any confusion the family photograph we shared yesterday caused.”

She called herself an amateur photographer who experimented with editing.

It’s unclear if the photo of Kate, who has been out of public view while recovering from abdominal surgery, was altered with artificial intelligence.

But it comes amid a larger conversation about public trust in the photos and videos circulating online, with AI fueling concerns over an ever-present and growing threat from misinformation.

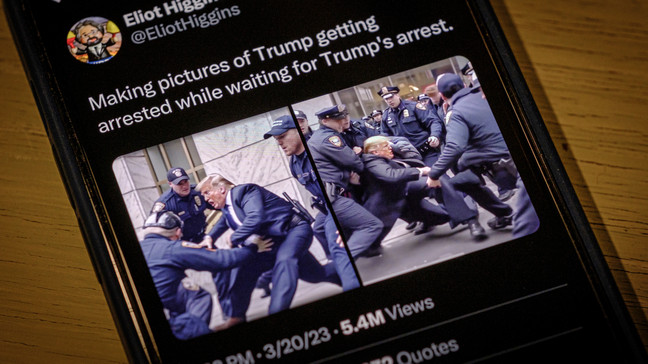

The BBC, for example, uncovered AI-generated photos showing former President Donald Trump posing with Black people, apparently intended to convey the sentiment that Trump is popular with Black voters.

And the Federal Communications Commission moved to outlaw AI-generated robocalls after New Hampshire voters received such calls, in which a fake President Joe Biden discouraged them from voting in the primary election.

“We cannot look at photos and videos, no matter how realistic they look, and say that is a real moment, frozen in time,” Jeremy Gilbert, a professor at Northwestern University and an expert in AI use in the media, said Monday. “The tools have gotten too good. It is too easy to make something that seems real but might not be.”

Technology companies acknowledged the dangers recently when some major players signed onto a voluntary pact to take “reasonable precautions” to stop AI-generated content that can trick voters.

But Gilbert said he’s worried people just aren’t skeptical enough yet in this new age of AI-generated imagery to truly protect themselves.

“Just as all of us run into situations where people are trying to scam us for information, in this case, I think that we're all going to face being scammed by people trying to supply false information,” he said.

AI can generate an image or video from scratch, or it can be used to alter real photos or videos.

“I think in many ways the AI-altered image or video is a little bit more nefarious,” Gilbert said.

Splicing one or two false words, lasting just three to five seconds, into an otherwise real 30-second clip of a politician speaking can easily slip past even the most discerning news consumer.

The tech industry and academic researchers are working on ways to identify and mark content as either authentic or AI-altered, but Gilbert said those safeguards will really only help people who intend to do the right thing with the content they create.

And news operations often rely on everyday eyewitnesses to supply photos or videos.

What if the verification tools, or trust badges, don't work with content captured by members of the public and relayed by news agencies?

“We live in a world of short-form vertical video, passersby recording critical moments,” he said. “I think there's a lot more potential, especially in kind of raw or less edited video, to slip something by that is untrue.”

Even the infamous deepfake videos are easier to make than most people realize, he said. Someone only needs about 20 seconds of video to create one, and most Americans have shared 20 seconds of video of themselves at some point.

Deepfakes are even easier with public figures, given the plethora of video content anyone can access.

Anton Dahbura, an AI expert and the co-director of the Johns Hopkins Institute for Assured Autonomy, previously told The National Desk that AI-generated content is a form of “reality hijacking.”

“It can be very influential if it's a presidential candidate or a celebrity telling you to do something or giving you some thoughts,” Dahbura said. “It's very effective.”

AI-powered voice impersonations, like those used in the robocalls, go hand-in-hand with video deepfakes. But fake audio is even easier to create, Dahbura said.

“It's really difficult to imagine the boundaries of damage that can be caused by something like this,” said Dahbura, who called the efforts to contain or mark AI content an “arms race.”

Some AI tools, such as OpenAI's Sora, can even generate video from text prompts.

Gilbert said AI-generated content is sometimes shared via private text messaging threads or WhatsApp groups, where it’s even harder for officials to spot and debunk disinformation.

Trust is vital for media consumers, and Gilbert said there are some simple steps folks can take to ensure they’re not being fooled.

If you see something shocking, unexpected or out of character for the public figure in a video, check other sources. If other outlets aren’t sharing the video, it might not be real.

Don't share something if you're not sure it's true, Gilbert said.

Fact-checkers are a good source of information.

And don’t assume something you get from a family member or friend is true if you don’t know where they got it from.

"Just kind of follow the tracks (to the source)," he said.